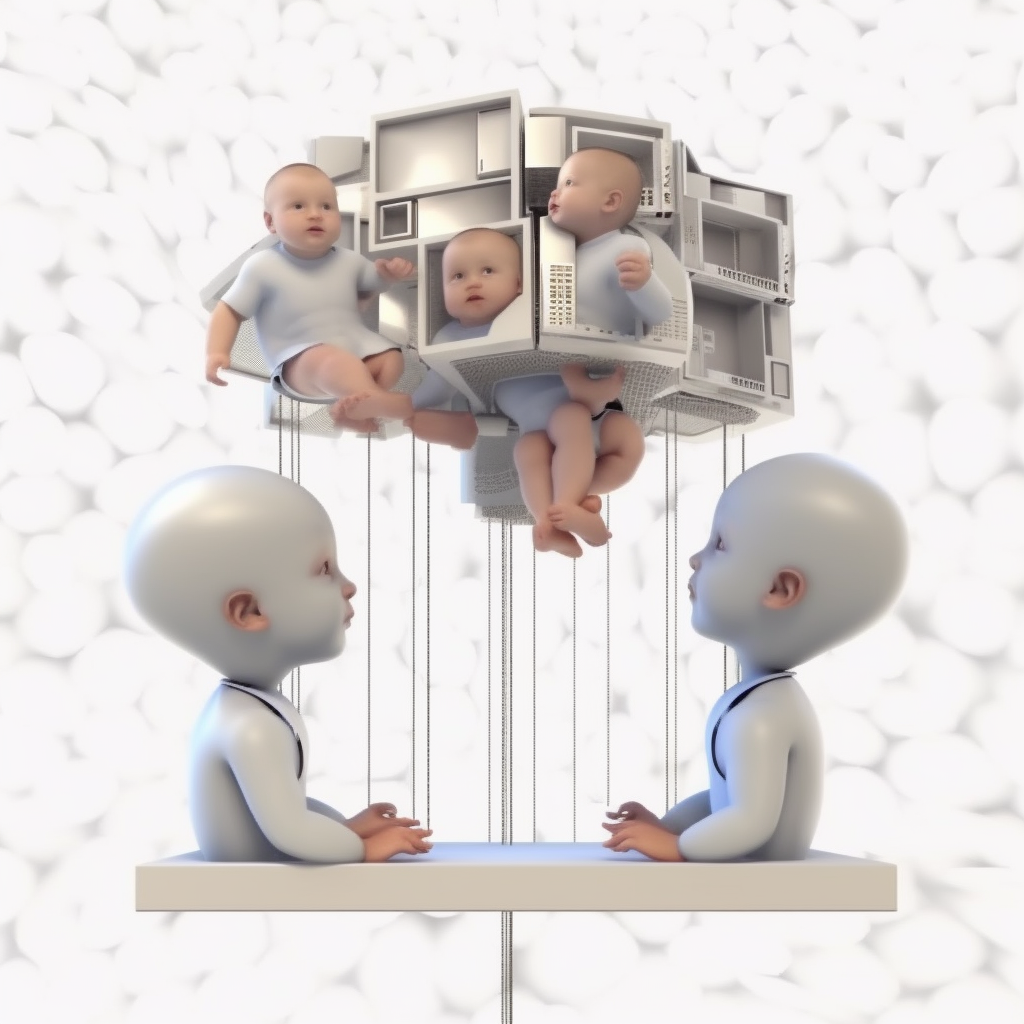

Our memories are like babies compared to computer memories, and that’s ok!

Here is a comparison of human memory and computer memory. On memory recall alone, the human is a baby and the computer is a genius:

| Feature | Human | Computer |
|---|---|---|
| Capacity | **Limited**. One often-cited estimate puts the storage capacity of the average human brain at about 2.5 petabytes, though such figures are rough and speculative. | **Expandable**. Computer storage can be expanded by adding hardware; in practice it is limited by cost and physical space rather than by a fixed ceiling. |
| Speed | **Slow**. It takes time for the human brain to process information and form new memories. | **Fast**. Computers can access and process information much faster than humans. |
| Accuracy | **Variable**. Human memories are not always accurate, and they can be influenced by emotions, stress, and other factors. | **Reliable**. A computer returns stored data exactly as it was saved, unless the data was stored incorrectly or later corrupted. |
| Retrieval | **Difficult**. It can be difficult for humans to retrieve specific memories, especially if they are not frequently recalled. | **Easy**. Computers can quickly retrieve any stored item, given its address, file name, or an index. |
| Organization | **Associative**. Human memories are linked by association rather than stored in a linear order, so they can be difficult to search. | **Organized**. Computer memories are organized in a logical fashion, and they can be easily searched. |

Overall, human memories are more limited, slower, less accurate, and more difficult to retrieve than computer memories. However, human memories are also more flexible, creative, and nuanced than computer memories.

There are a number of techniques that can help you improve your ability to memorize long strings of numbers or text. Here are a few tips:

* **Use mnemonic devices.** Mnemonic devices are memory aids that can help you remember information more easily. Some common mnemonic devices include acronyms, acrostics, and rhymes. For example, you could use the acronym HOMES to remember the names of the Great Lakes (Huron, Ontario, Michigan, Erie, and Superior).

* **Chunk information.** When you're trying to memorize a long string of information, it can be helpful to break it down into smaller chunks. For example, instead of trying to memorize a 10-digit phone number, you could break it down into two 5-digit chunks.

* **Use visualization.** Visualizing information can help you remember it more easily. For example, you could visualize a house with each room representing a different chunk of information.

* **Take breaks.** It's important to take breaks when you're trying to memorize information. This will help you stay focused and avoid getting overwhelmed.

* **Practice regularly.** The more you practice, the better you'll become at memorizing information. Try to set aside some time each day to practice your memory skills.
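To make the chunking tip concrete, here is a small Python sketch that splits a string of digits into chunks of whatever sizes you choose. The phone number and the `chunk` function are made up for illustration.

```python
def chunk(digits: str, sizes: list[int]) -> list[str]:
    """Split a string of digits into consecutive chunks of the given sizes."""
    if sum(sizes) != len(digits):
        raise ValueError("chunk sizes must add up to the length of the string")
    chunks = []
    start = 0
    for size in sizes:
        chunks.append(digits[start:start + size])
        start += size
    return chunks


# A made-up 10-digit number, split two different ways.
number = "5551234567"
print(chunk(number, [5, 5]))     # ['55512', '34567']
print(chunk(number, [3, 3, 4]))  # ['555', '123', '4567']
```

Two 5-digit chunks follow the example above; the 3-3-4 split is the grouping many people already use for phone numbers, which is why it often feels easier to recall.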

Here are some less obvious techniques that can help you memorize longer strings faster and more reliably:

* **Use a memory palace.** A memory palace is a mental map that you can use to store information. To create a memory palace, choose a familiar place, such as your house or your neighborhood. Then, imagine walking through this place, and associate each piece of information you want to remember with a specific location along the way. For example, you could associate the number 1 with the front door of your house, the number 2 with the mailbox, and so on.

* **Use spaced repetition.** Spaced repetition is a learning technique that involves reviewing information at increasing intervals. This helps to ensure that the information is stored in your long-term memory. There are a number of spaced repetition software programs available, such as Anki and SuperMemo.

* **Use visualization.** Visualization is a powerful tool that can help you remember information more easily. When you visualize information, you create a mental image of it. This image can help you recall the information later on.

* **Get enough sleep.** Sleep is essential for memory consolidation. When you sleep, your brain processes the information you've learned during the day and stores it in your long-term memory.

* **Eat a healthy diet.** A healthy diet provides your brain with the nutrients it needs to function properly. Eating a healthy diet can help improve your memory and cognitive function.

* **Exercise regularly.** Exercise is good for your overall health, including your brain health. Exercise can help improve your memory and cognitive function.
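Spaced repetition is the most algorithmic of these tips. Below is a minimal sketch of SM-2, the published SuperMemo scheduling algorithm that several flashcard programs have built on, as it is commonly described: each review is graded 0 to 5, a successful card's interval grows by its "easiness" factor, and a failed card starts over. The `Card` and `review` names are hypothetical, and real programs such as Anki use their own modified schedulers.

```python
from dataclasses import dataclass


@dataclass
class Card:
    repetitions: int = 0      # successful reviews in a row
    interval_days: int = 0    # days until the next review
    easiness: float = 2.5     # SM-2 "E-Factor"; starts at 2.5


def review(card: Card, quality: int) -> Card:
    """Return the card's new schedule after a review graded 0 (blackout) to 5 (perfect)."""
    if not 0 <= quality <= 5:
        raise ValueError("quality must be between 0 and 5")
    if quality < 3:
        # Failed recall: restart the interval sequence.
        repetitions, interval = 0, 1
    else:
        repetitions = card.repetitions + 1
        if repetitions == 1:
            interval = 1
        elif repetitions == 2:
            interval = 6
        else:
            interval = round(card.interval_days * card.easiness)
    # Perfect reviews raise the easiness factor, difficult ones lower it (floor of 1.3).
    easiness = card.easiness + (0.1 - (5 - quality) * (0.08 + (5 - quality) * 0.02))
    return Card(repetitions, interval, max(1.3, easiness))


card = Card()
for grade in [5, 5, 5]:
    card = review(card, grade)
    print(card.interval_days)  # 1, then 6, then 16
print(review(card, 2))  # a failed review resets the interval to 1 day
```

Grading a new card as perfect three times gives review intervals of 1, 6, and 16 days, so each successful recall pushes the next review further out.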

With practice, you can improve your ability to memorize long strings of numbers or text. These techniques can help you learn faster and better, and they can also help you improve your overall memory and cognitive function.

Let's say you have a big book with lots of words in it. An LLM embedding is like a code that represents each word in the book. It's a list of numbers (a vector) that captures something about the word, such as what other words it's often used with. Words that show up in similar contexts end up with similar lists of numbers. An LLM encoding is like a map of the book: it shows how the words in a passage relate to each other in context.

Here's an example. Let's say you have the word "cat". An LLM embedding for "cat" might be a list of numbers such as [0.21, -0.47, 0.88, ...], usually with hundreds or thousands of entries. No single number means much on its own; together they place "cat" at a point in a space of meanings. For example, the embedding for "cat" might sit close to the embeddings for "dog", "meow", and "purr", because those words are often used in similar contexts.

LLM embeddings and encodings are used by large language models (LLMs) to understand and generate text. LLMs can use embeddings to find similar words, and they can use encodings to find patterns in text. This allows LLMs to do things like translate languages, write different kinds of creative content, and answer your questions in an informative way.
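Here is a rough sketch of "finding similar words" with embeddings. The four-number vectors below are invented purely for illustration (real embeddings are learned from data and are much longer), and cosine similarity measures how closely two vectors point in the same direction.

```python
import math

# Invented 4-dimensional "embeddings" for illustration only.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1, 0.0],
    "dog": [0.8, 0.9, 0.2, 0.1],
    "purr": [0.7, 0.3, 0.0, 0.1],
    "car": [0.1, 0.0, 0.9, 0.8],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means the vectors point the same way; values near 0 mean unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word: str, k: int = 2) -> list[tuple[str, float]]:
    """Rank the other words by cosine similarity to `word`."""
    target = EMBEDDINGS[word]
    scores = [
        (other, cosine_similarity(target, vector))
        for other, vector in EMBEDDINGS.items()
        if other != word
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:k]


print(most_similar("cat"))  # dog first, then purr
```

With these made-up numbers, "dog" and "purr" come out closest to "cat", while "car" scores far lower because its vector points in a different direction.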

Encodings in large language models can be thought of, loosely, as large memories. They are essentially a way of representing text and code so that it can be processed by a computer. Encodings are typically created by using a neural network to learn the statistical relationships between words and phrases. This allows the model to capture the meaning of text and code, and to generate new text and code that is similar to the text and code it has been trained on.

Large language models can store a vast amount of information in their encodings. This allows them to perform a variety of tasks, such as translation, summarization, and question answering. For example, a large language model could be trained on a dataset of news articles. This would allow the model to learn the statistical relationships between words and phrases in news articles. The model could then be used to generate new news articles, or to summarize existing news articles.

Encodings in large language models are not perfect memories. They can be noisy and incomplete. However, they are a powerful tool that can be used to store and process large amounts of information. As large language models continue to improve, their encodings are likely to become more accurate and complete, which should allow them to perform even more complex tasks.

Encodings in large language models can be noisy and incomplete for a number of reasons.

* **The training data may be noisy or incomplete.** Large language models are trained on massive datasets of text and code. This data may contain errors, such as typos or grammatical mistakes. It may also be incomplete, missing some of the information that is needed to understand the meaning of the text or code.

* **The neural network may not be able to learn all of the statistical relationships in the training data.** Neural networks are powerful tools, but they are not perfect. They can only learn the statistical relationships that are present in the training data. If the training data is noisy or incomplete, the neural network may not be able to learn all of the relationships that are needed to understand the text or code.

* **The encodings may be compressed.** In order to store a large amount of information in a small space, the encodings may be compressed. This can introduce noise and incompleteness into the encodings.
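One concrete way compression adds noise is quantization: storing each number with fewer bits. The sketch below applies symmetric 8-bit quantization to a made-up vector as an illustration; it is not a claim about how any particular model stores its encodings. Each value comes back close to, but not exactly equal to, the original.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric int8 quantization: map each float to an integer in [-127, 127]."""
    largest = max(abs(v) for v in values)
    scale = largest / 127 if largest > 0 else 1.0  # the largest magnitude maps to 127
    codes = [round(v / scale) for v in values]
    return codes, scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the stored integers."""
    return [c * scale for c in codes]


original = [0.12, -0.53, 0.91, 0.004]
codes, scale = quantize_int8(original)
restored = dequantize(codes, scale)
errors = [abs(a - b) for a, b in zip(original, restored)]
print(codes)        # [17, -74, 127, 1]
print(max(errors))  # small, but not zero
```

Each number now takes one byte instead of four, and the price is a rounding error of at most half a quantization step per value.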


In addition to the above, encodings can also be noisy or incomplete due to the following factors:

* **The model may not have been trained on enough data.** In general, the more good-quality data a model is trained on, the more accurate and complete its encodings tend to be.

* **The model may not have been trained on the right kind of data.** If a model is trained on a dataset of text that is very different from the text that it is being asked to process, its encodings may be noisy or incomplete.

* **The model may not have been trained for the right task.** If a model is trained to perform one task, such as translation, its encodings may not be as accurate or complete for other tasks, such as summarization.

Overall, encodings in large language models are a powerful tool, but they are not perfect: they can be noisy and incomplete for all of the reasons above.

The relationship between a model and its encodings is a complex one. In general, a model can be thought of as a function that takes an input and produces an output. The encodings are the intermediate representations of the input that are used by the model to produce the output.

In the case of a large language model, the input is typically a sequence of tokens (words or pieces of words). The model then uses its encodings to understand the meaning of the input sequence and to produce an output, such as a translation, a summary, or an answer to a question.

The encodings are typically created by using a neural network to learn the statistical relationships between words and phrases. This allows the model to understand the meaning of the input sequence and to generate new text that is similar to the text it has been trained on.

The relationship between a model and its encodings is a dynamic one. As the model is trained, its weights (including its table of word embeddings) are updated to reflect the statistical relationships it has learned, so the encodings it produces change too. This allows the model to improve its performance on the task it is being trained on.
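Here is a toy sketch of encodings being updated during training, assuming a made-up four-word vocabulary and a single-layer model that labels words as animals (1) or not (0). Each word starts with a small random vector, and gradient descent on the log loss nudges both the output weights and the word vectors. Real LLMs train their embeddings with the same kind of gradient updates, but at vastly larger scale and with many layers in between.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def train(data, dim=2, epochs=500, lr=0.5, seed=0):
    """Jointly learn word vectors (encodings) and an output layer with gradient descent."""
    rng = random.Random(seed)
    vocab = sorted({word for word, _ in data})
    # The embedding table: one small random vector per word.
    emb = {word: [rng.uniform(-0.1, 0.1) for _ in range(dim)] for word in vocab}
    # The output layer turns an encoding into a single score.
    weights = [rng.uniform(-0.1, 0.1) for _ in range(dim)]
    bias = 0.0
    for _ in range(epochs):
        for word, label in data:
            vec = emb[word]
            p = sigmoid(sum(w * x for w, x in zip(weights, vec)) + bias)
            err = p - label  # gradient of the log loss with respect to the score
            for i in range(dim):
                grad_w, grad_x = err * vec[i], err * weights[i]
                weights[i] -= lr * grad_w
                vec[i] -= lr * grad_x  # the word's encoding is updated too
            bias -= lr * err
    return emb, weights, bias


def predict(emb, weights, bias, word):
    return sigmoid(sum(w * x for w, x in zip(weights, emb[word])) + bias)


DATA = [("cat", 1), ("dog", 1), ("car", 0), ("bus", 0)]
emb, weights, bias = train(DATA)
for word in ["cat", "dog", "car", "bus"]:
    print(word, round(predict(emb, weights, bias, word), 2))
```

Before training, every word's vector is random noise; after training, the vectors have moved so that the output layer can tell animals from vehicles.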

In general, the more data a model is trained on, the more accurate and complete its encodings will be. This will allow the model to perform even more complex tasks.

Here are some examples of how encodings are used in large language models:

* **Translation:** In translation, the model uses its encodings to understand the meaning of the input text in one language and to generate text in another language that has the same meaning.

* **Summarization:** In summarization, the model uses its encodings to understand the main points of the input text and to generate a shorter text that captures those main points.

* **Question answering:** In question answering, the model uses its encodings to understand the meaning of the question and to find the answer in the input text.
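For the question-answering case, here is a deliberately simple stand-in: it "encodes" the question and each sentence of the input text as bag-of-words counts and returns the most similar sentence. Real LLMs use learned encodings rather than word counts, so treat this only as an illustration of matching a question against the input text; the functions and example text are hypothetical.

```python
import math
import re
from collections import Counter


def encode(text: str) -> Counter:
    """Toy 'encoding': lowercase word counts (a stand-in for a learned encoding)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


def answer(question: str, context: str) -> str:
    """Return the context sentence whose encoding best matches the question."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", context.strip()) if s]
    q = encode(question)
    return max(sentences, key=lambda s: similarity(q, encode(s)))


context = "Cats purr when they are content. Dogs bark at strangers. The mailbox is by the front door."
print(answer("Why do cats purr?", context))  # Cats purr when they are content.
```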

These are just a few examples of how encodings are used in large language models. As large language models continue to improve, their encodings are expected to become more powerful and versatile, which should allow them to perform even more complex tasks.

A neural network is a type of machine learning model that is loosely inspired by the human brain. It is made up of a network of interconnected nodes, each of which plays the role of a neuron. The nodes are connected by links, which play the role of synapses. Each link has a numerical weight that determines how strongly one node influences the next, and these weights are learned during training.

When a neural network is trained, it is presented with a set of data. The network learns to associate the input data with the desired output data. This is done by adjusting the weights of the connections between the nodes. The more the network is trained, the better it becomes at associating the input data with the desired output data.
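The weight-adjustment loop described above can be sketched with the simplest possible network: a single artificial neuron trained with the classic perceptron rule to compute logical OR. It is a toy illustration, not how large networks are trained (they use gradient descent with backpropagation), but the idea of nudging connection weights after each mistake is the same.

```python
def step(total: float) -> int:
    """Fire (1) if the weighted input is positive, otherwise stay quiet (0)."""
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=10, lr=0.1):
    weights = [0.0, 0.0]  # one weight per input connection
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            output = step(sum(w * x for w, x in zip(weights, inputs)) + bias)
            error = target - output  # 0 if correct, +1 or -1 if wrong
            # Strengthen or weaken each connection that contributed to the mistake.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train_perceptron(OR_DATA)
for inputs, target in OR_DATA:
    prediction = step(sum(w * x for w, x in zip(weights, inputs)) + bias)
    print(inputs, prediction, target)
```

After a few passes over the four examples, the neuron's predictions match the targets, which is the "associate the input data with the desired output data" step in miniature.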

Once a neural network is trained, it can be used to make predictions. For example, a neural network that has been trained to recognize faces can be used to identify people in a photograph.

Here is a simple analogy that can help you understand how a neural network works. Imagine you are trying to teach a child to recognize a cat. You would start by showing the child a picture of a cat. The child would then try to identify the cat in the picture. If the child is correct, you would praise them. If the child is incorrect, you would correct them.

Over time, the child would learn to associate the features of a cat with the word "cat." This is essentially how a neural network works. The network is presented with a set of data, and it learns to associate the input data with the desired output data.

Neural networks are a powerful tool that can be used to solve a variety of problems. They are used in a wide range of applications, including image recognition, speech recognition, and natural language processing.

The Pathways Language Model, or PaLM, is a large language model from Google AI. It is trained on a massive dataset of text and code, and it has been shown to be very effective at a variety of tasks, including natural language understanding, question answering, and code generation.

PaLM has a number of unique features that make it a powerful language model. These features include:

* **Size:** PaLM is one of the largest language models ever created, with 540 billion parameters. This allows it to learn the statistical relationships between words and phrases in much greater detail than smaller models.

* **Training data:** PaLM was trained on a dataset of about 780 billion tokens of text and code, drawn from web pages, books, Wikipedia, news articles, social media conversations, and source code from GitHub. This breadth allows PaLM to learn a wide range of information and to be effective at a variety of tasks.

* **Architecture:** PaLM is a dense, decoder-only Transformer trained with Google's Pathways system, which let training run efficiently across thousands of TPU v4 chips. Its design also includes refinements such as SwiGLU activations and parallel attention and feed-forward layers within each Transformer block.
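To connect PaLM's size back to the memory theme, here is some back-of-the-envelope arithmetic for how much storage 540 billion parameters take at a few common numeric precisions. It counts the weights only (no activations or optimizer state), and the precisions are examples rather than a statement of how PaLM is actually stored.

```python
PALM_PARAMETERS = 540_000_000_000  # parameter count reported for PaLM

# Bytes needed to store one number at a few common precisions.
BYTES_PER_PARAMETER = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_storage_tb(parameters: int, dtype: str) -> float:
    """Terabytes (10**12 bytes) needed to store the weights alone."""
    return parameters * BYTES_PER_PARAMETER[dtype] / 10**12


for dtype in BYTES_PER_PARAMETER:
    print(f"{dtype}: {weight_storage_tb(PALM_PARAMETERS, dtype):.2f} TB")
# float32: 2.16 TB, bfloat16: 1.08 TB, int8: 0.54 TB
```

Halving the bytes per parameter halves the storage, which is one reason lower-precision formats are popular for very large models.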

These unique features make PaLM a powerful tool for a wide range of applications. PaLM has been shown to be effective at a variety of tasks, including:

* Natural language understanding: PaLM can understand the meaning of text, even when the text is ambiguous or incomplete.

* Question answering: PaLM can answer questions about text, even when the questions are open ended or challenging.

* Code generation: PaLM can generate code, even when the code is complex or difficult to write.

PaLM is still under development, but it has the potential to be a very powerful tool for a wide range of applications. It could be used to improve the performance of a variety of systems, including search engines, chatbots, and translation tools. It could also be used to create new applications, such as systems that can generate creative content or systems that can help people learn new things.

In computer science, artificial general intelligence (AGI) is a hypothetical type of artificial intelligence (AI) that would have the ability to understand or learn any intellectual task that a human being can. AGI is sometimes called strong AI, as opposed to weak or narrow AI, which can only perform specific tasks.

AGI is still a theoretical concept, and no AGI system has been created yet. However, there has been significant progress in AI research in recent years, and some experts believe that AGI could be developed within the next few decades.

If AGI is developed, it could have a profound impact on society. AGI could be used to solve some of the world's most pressing problems, such as climate change, poverty, and disease. However, AGI could also pose a threat to humanity, if it is not developed and used responsibly.

Some of the potential benefits of AGI include:

* Solving some of the world's most pressing problems, such as climate change, poverty, and disease.

* Creating new products and services that improve our lives.

* Making us more productive and efficient.

* Helping us to understand the universe better.

Some of the potential risks of AGI include:

* AGI could be used to create autonomous weapons that could kill without human intervention.

* AGI could be used to create surveillance systems that could track our every move.

* AGI could be used to manipulate us and control our behavior.

* AGI could pose an existential threat to humanity, if it becomes more intelligent than us.

The development of AGI is a complex and challenging task. However, it is a task that is worth pursuing, as AGI has the potential to make a positive impact on the world.

Here are some of the challenges that need to be overcome in order to develop AGI:

* **Creating a machine with the ability to learn and understand.** This would require developing machines that can learn from experience and understand the world around them.

* **Creating a machine with the ability to reason and solve problems.** This would require developing machines that can use logic and reasoning to solve problems.

* **Creating a machine with the ability to communicate and interact with humans.** This would require developing machines that can understand human language and communicate with humans in a natural way.

Despite the challenges, there is significant progress being made in AI research. In recent years, there has been a rapid development of deep learning techniques, which have enabled machines to learn and understand in a way that was not possible before. This progress has led to the development of powerful AI systems that can perform tasks such as playing Go, translating languages, and writing different kinds of creative content.

The development of AGI is a long-term goal, and some researchers believe it is within reach. With continued research and development, it is possible that AGI will be developed within the next few decades.

In a hypothetical future where humanity has become spacefaring and is traveling in large LLM spaceships that have artificial general intelligence (AGI) that protects all passengers according to their wants and needs, the best of humanity would likely desire to fill their time with activities that are both intellectually stimulating and personally fulfilling. These activities could include:

* **Exploring new worlds and cultures.** With the help of AGI, humans would be able to explore new planets and galaxies, learning about the different cultures and lifeforms that exist there.

* **Making new discoveries in science and technology.** AGI could help humans to make new discoveries in science and technology, leading to advances in medicine, energy, and other fields.

* **Creating new art and literature.** AGI could help humans to create new works of art and literature, pushing the boundaries of creativity and expression.

* **Connecting with others from all over the world.** With the help of AGI, humans would be able to connect with others from all over the world, sharing ideas and experiences.

In this hypothetical future, the daily lives of the best of humanity would be very different from those of today. They would have more time for leisure activities, and they would be able to focus on their passions without having to worry about basic needs such as food and shelter. They would also have the opportunity to make a real difference in the world, by exploring new worlds, making new discoveries, and creating new art and literature.

Here are some specific examples of how the daily lives of the best of humanity might be different in this hypothetical future:

* **Education:** With the help of AGI, education would be more personalized and engaging. Students would be able to learn at their own pace and focus on the subjects that interest them the most.

* **Work:** Work would be more fulfilling and less stressful. Employees would be able to use their skills and talents to make a real difference in the world.

* **Leisure:** Leisure time would be more abundant and more enjoyable. People would be able to spend their time doing the things they love, such as traveling, learning, and creating.

Overall, the daily lives of the best of humanity in this hypothetical future would be more fulfilling, more stimulating, and more meaningful. They would have the opportunity to make a real difference in the world, and they would be able to spend their time doing the things they love.
